This is a live stream of an ongoing software development project focused on real-time sonification of environmental data.

The “wlan” in wlanMusic reflects that some of the samples and synths are triggered by sniffed WiFi packets. The rate of WiFi activity may also determine the tempo and note duration of synchronized instruments.
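The page does not say how the packet rate becomes a tempo. As an illustration only, here is a minimal Python sketch that assumes a logarithmic mapping of packets per second onto a hypothetical 60 to 180 BPM range. The names `packets_to_bpm`, `note_duration`, `MIN_BPM`, `MAX_BPM` and `MAX_PPS` are invented for this example and do not come from the wlanMusic code, which is written in SuperCollider.

```python
import math

# Hypothetical tempo bounds and saturation point: the actual mapping used
# by wlanMusic is not documented on this page.
MIN_BPM = 60.0
MAX_BPM = 180.0
MAX_PPS = 1000.0  # packets per second treated as maximum activity


def packets_to_bpm(packets_per_second: float) -> float:
    """Map a sniffed WiFi packet rate onto [MIN_BPM, MAX_BPM].

    A logarithmic curve keeps the tempo responsive at low activity
    instead of jumping straight to MAX_BPM during a burst of traffic.
    """
    pps = min(max(packets_per_second, 0.0), MAX_PPS)  # clamp to valid range
    fraction = math.log1p(pps) / math.log1p(MAX_PPS)  # 0.0 .. 1.0
    return MIN_BPM + fraction * (MAX_BPM - MIN_BPM)


def note_duration(bpm: float, beats: float = 1.0) -> float:
    """Length in seconds of a note lasting `beats` beats at `bpm`."""
    return beats * 60.0 / bpm


if __name__ == "__main__":
    for rate in (0, 10, 100, 1000):
        bpm = packets_to_bpm(rate)
        print(f"{rate:>5} pkt/s -> {bpm:6.1f} BPM, "
              f"quarter note {note_duration(bpm):.3f} s")
```

The log curve is only one plausible choice; a linear map, or smoothing the packet rate with a moving average before mapping it, would serve the same purpose.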

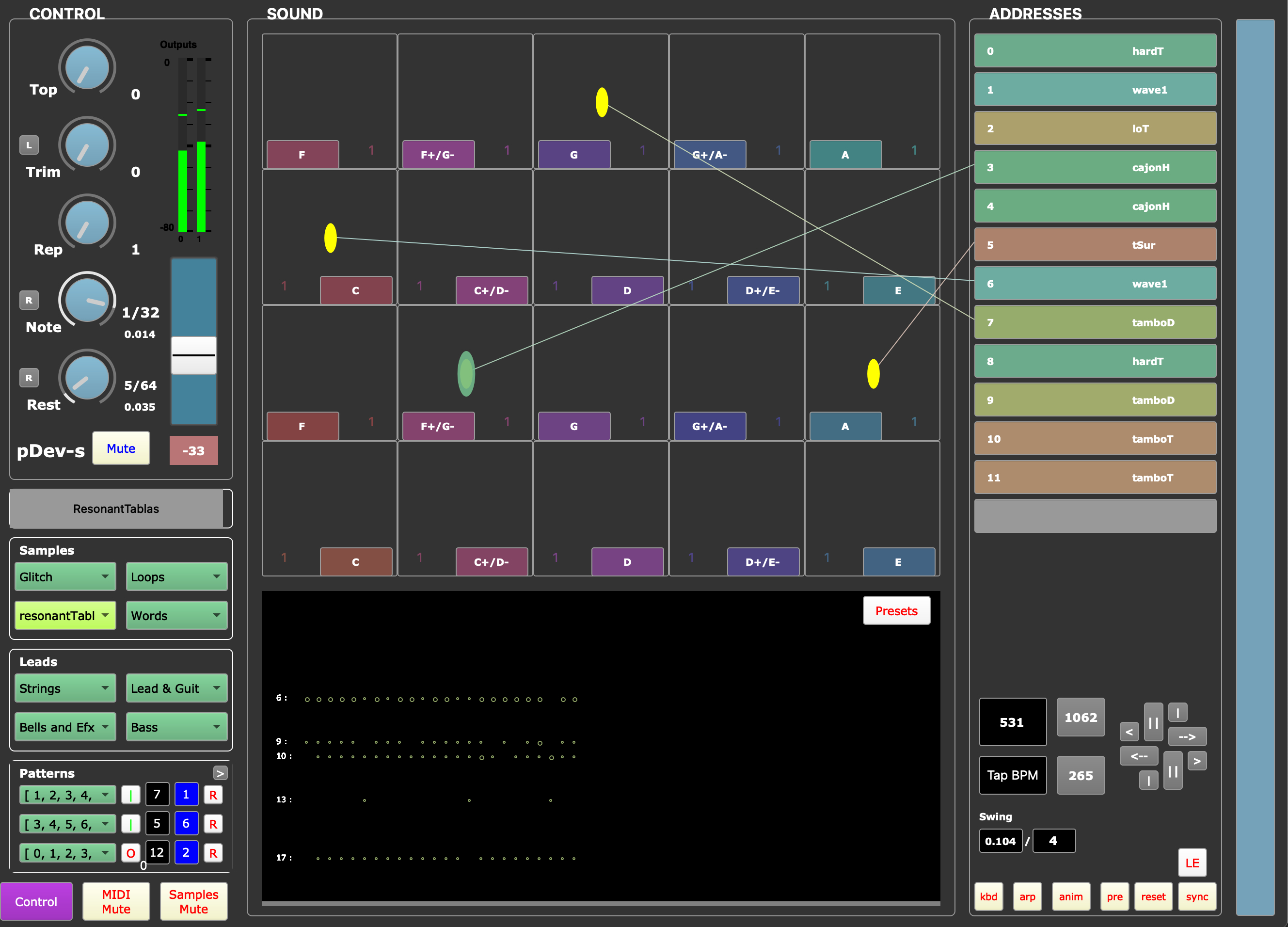

The software is written in SuperCollider and runs on Raspberry Pi and Apple hardware. A complementary sequencer synchronizes with the sonification data stream and also triggers samples and synthesizers. The mixdown is made in Ableton Live before being uploaded to this Icecast server for broadcast.

WiFi Player (2024/10)

Sequencer (2024/10)

News (07/14): Owing to intractable macOS and software malfunctions outside of user control, this site has switched almost entirely to hardware/analog sound production. The software analyses WiFi activity to set the tempo of the system's sequencers and also sends pitch and other program information via MIDI to hardware devices such as the Moog Subharmonicon, the Elektron Digitone and modular configurations. These are mixed on hardware mixers (instead of in Ableton Live, as before the malfunctions) before streaming to this server. A looping guitar track might also contribute to the usual, overwhelming density of sound.
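The page does not show how tempo and pitch reach the hardware. The Python sketch below only builds the raw bytes defined by the MIDI 1.0 specification: the spacing of Timing Clock messages (status 0xF8, sent 24 times per quarter note) for a given tempo, and a three-byte Note On message. The pitch comes from a hypothetical packet-length mapping; `packet_to_note` and its 36 to 84 note range are illustrative assumptions, not the site's actual method. Sending the bytes to a device through a MIDI port is left out.

```python
# Status bytes and clock resolution from the MIDI 1.0 specification.
NOTE_ON = 0x90            # Note On; the low nibble carries the channel
TIMING_CLOCK = 0xF8       # System Real-Time clock tick
PULSES_PER_QUARTER = 24   # MIDI clock runs at 24 pulses per quarter note


def clock_interval(bpm: float) -> float:
    """Seconds between successive Timing Clock messages at `bpm`."""
    return 60.0 / (bpm * PULSES_PER_QUARTER)


def packet_to_note(packet_length: int, low: int = 36, high: int = 84) -> int:
    """Hypothetical pitch mapping: fold a packet's length into [low, high]."""
    return low + packet_length % (high - low + 1)


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a Note On message; channel is 0-15, data bytes are 0-127."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("MIDI value out of range")
    return bytes([NOTE_ON | channel, note, velocity])


if __name__ == "__main__":
    bpm = 120.0
    tick = bytes([TIMING_CLOCK])
    print(f"send {tick.hex()} every {clock_interval(bpm) * 1000:.2f} ms")
    msg = note_on(channel=0, note=packet_to_note(1500), velocity=100)
    print("note on:", msg.hex(" "))
```

Deriving the clock from the same tempo value that drives the software sequencers is what would keep hardware sequencers locked to the WiFi-derived tempo.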

Also new is a programmatic means of creating titled audio tracks on the fly. When a desired sound is achieved, an inspired title for the track may be entered in GNU Emacs, which triggers a 10-minute recording of the audio stream. The recording is automatically saved to an index of recordings, which are reloaded into the ongoing loop playing in the More section of this site's front page.
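How Emacs hands the title to the recorder, and what format the index uses, is not described here. The Python sketch below covers only the bookkeeping side under stated assumptions: a JSON file as the index, WAV file names built from a timestamp plus a slug of the title, and a `playlist` helper that returns the files in recording order for a playback loop. All of these names (`slugify`, `add_to_index`, `playlist`, the `.wav` extension, the JSON layout) are hypothetical.

```python
import json
import re
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

RECORD_SECONDS = 10 * 60  # the page describes 10-minute recordings


def slugify(title: str) -> str:
    """Turn a free-form track title into a safe file-name stem."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug or "untitled"


def add_to_index(index_path: Path, title: str,
                 now: Optional[datetime] = None) -> dict:
    """Append an entry for a new recording to a JSON index and return it."""
    now = now or datetime.now(timezone.utc)
    entry = {
        "title": title,
        "file": f"{now:%Y%m%d-%H%M%S}-{slugify(title)}.wav",  # assumed naming
        "duration_seconds": RECORD_SECONDS,
        "recorded_at": now.isoformat(),
    }
    entries = json.loads(index_path.read_text()) if index_path.exists() else []
    entries.append(entry)
    index_path.write_text(json.dumps(entries, indent=2))
    return entry


def playlist(index_path: Path) -> list:
    """File names in recording order, e.g. for reloading a playback loop."""
    return [e["file"] for e in json.loads(index_path.read_text())]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        index = Path(tmp) / "recordings.json"
        add_to_index(index, "Packet Storm over the Harbour")
        print(playlist(index))
```

Keeping the title in the index, separate from the slugged file name, lets the front page display the original wording while the file system only sees safe characters.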